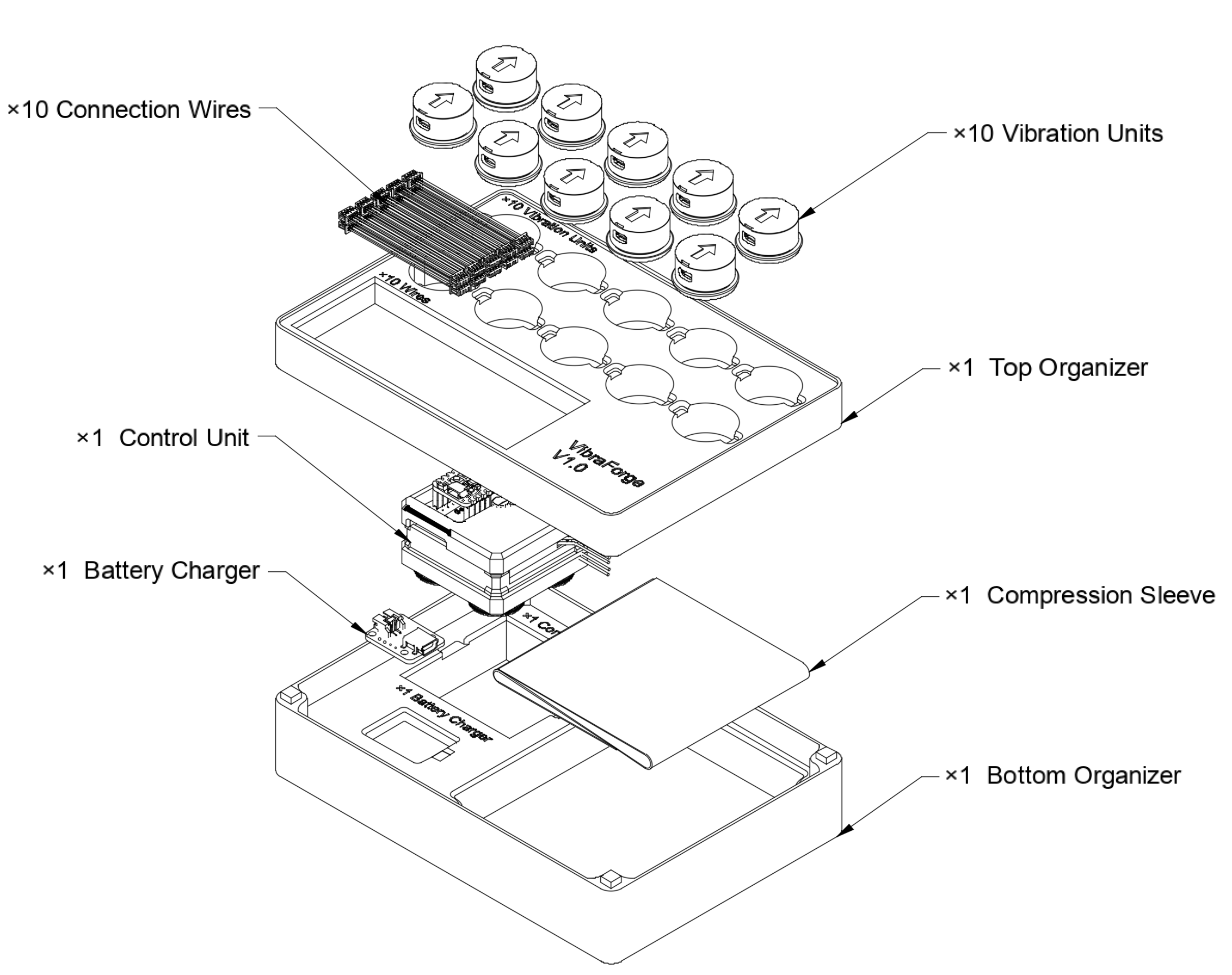

Features

Minimal hardware, modular architecture, and expressive tactile capabilities, designed for rapid R&D and a premium user experience.

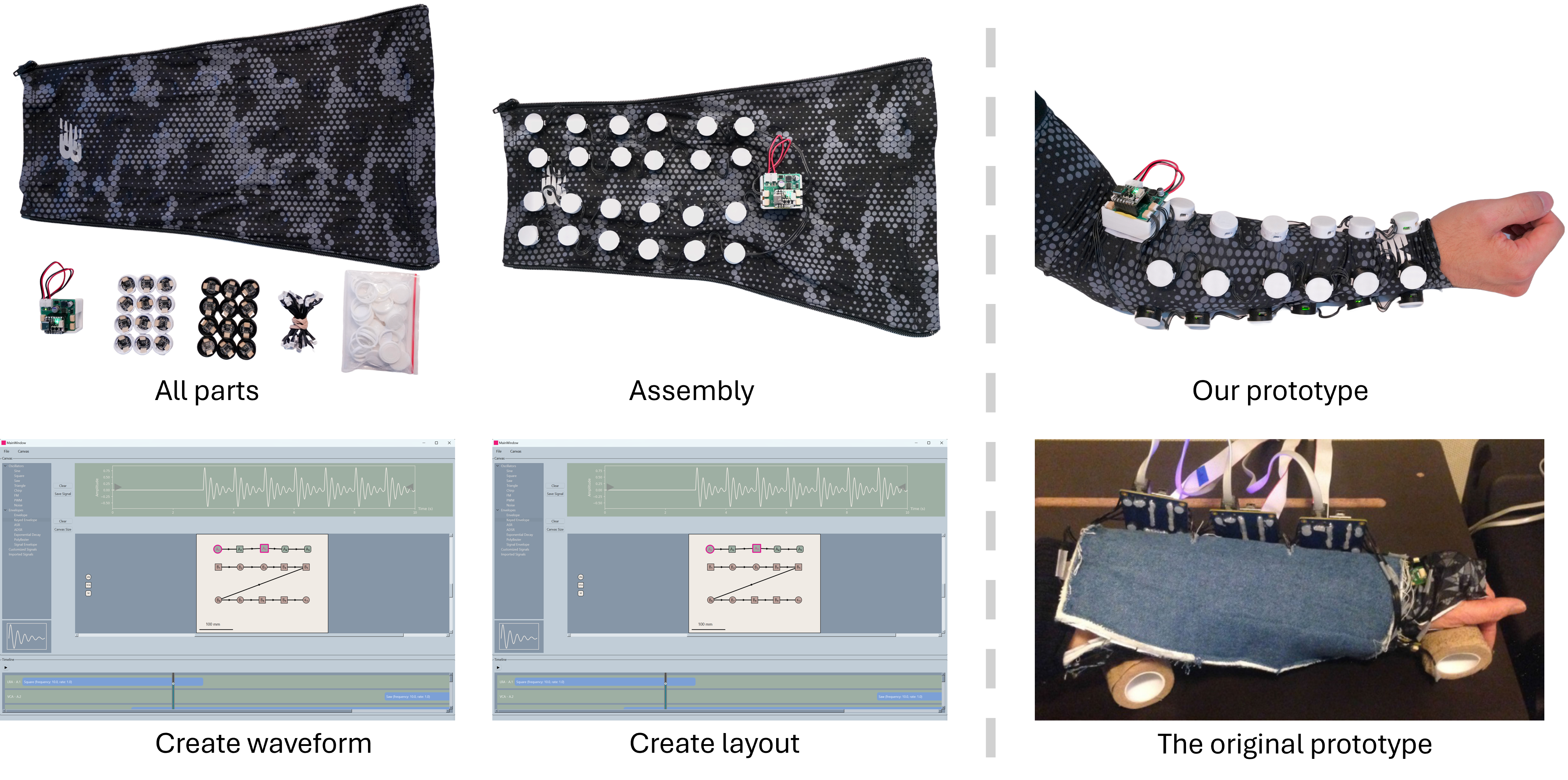

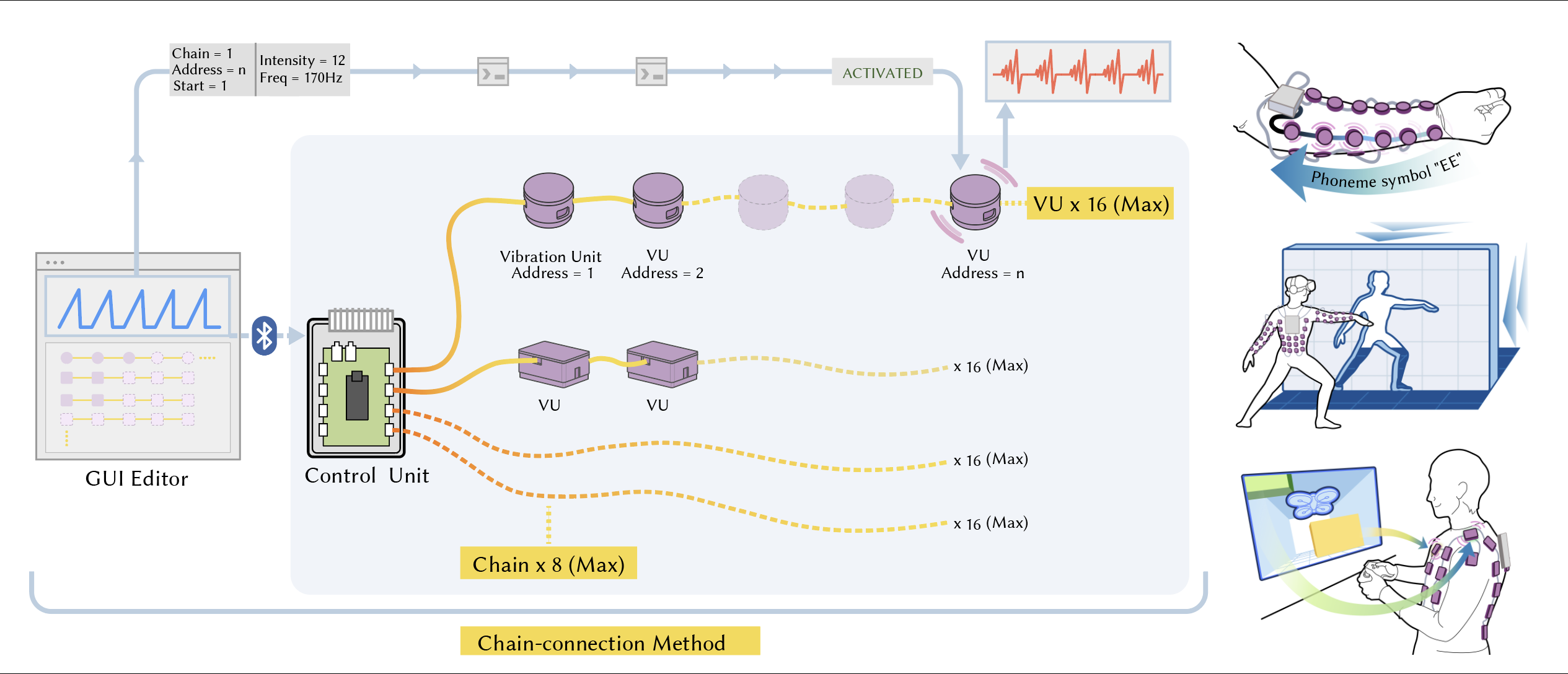

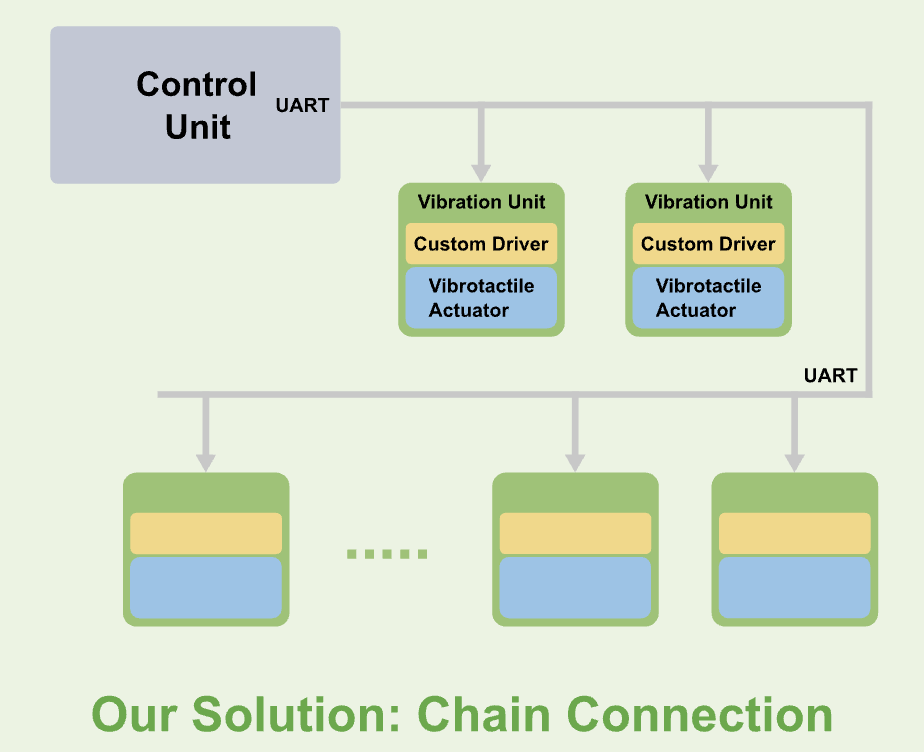

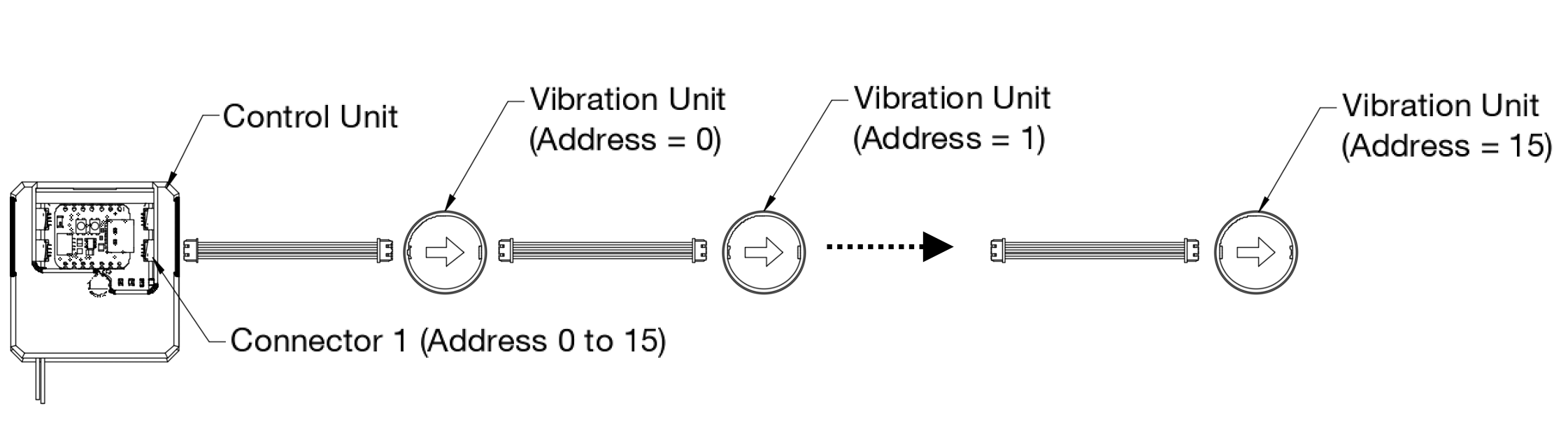

128-Actuator Architecture

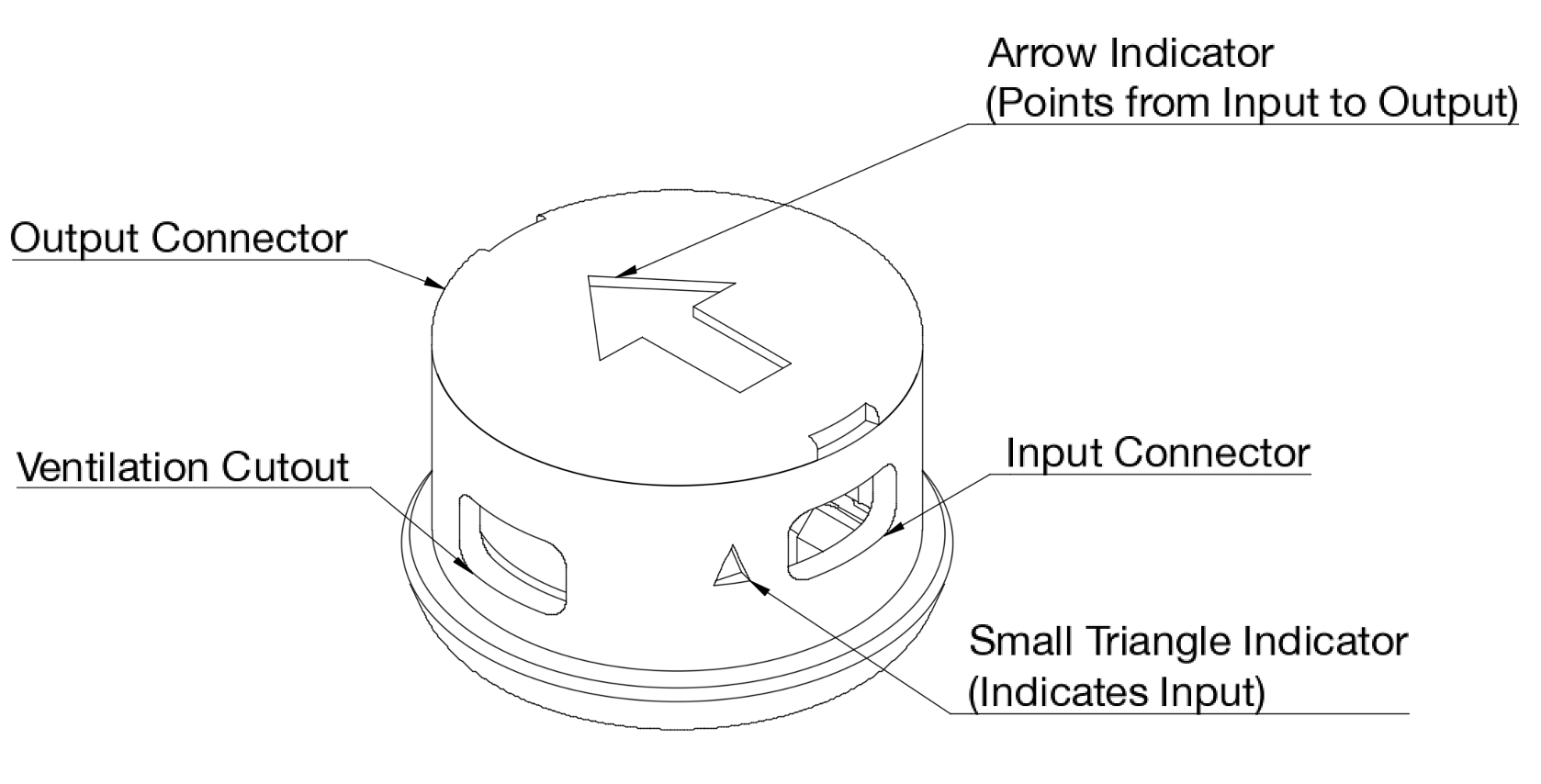

8 chains × 16 units each (128 actuators total): clean, scalable, modular.
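
As a rough illustration of the 8 × 16 layout, the sketch below maps a flat actuator index (0 to 127) to a chain and a position on that chain. The numbering convention and the names `to_chain_unit` and `to_actuator_id` are assumptions made for this example, not the device's documented addressing scheme.

```python
CHAINS = 8            # number of chains, from the feature description
UNITS_PER_CHAIN = 16  # actuators per chain, from the feature description
TOTAL_ACTUATORS = CHAINS * UNITS_PER_CHAIN  # 128


def to_chain_unit(actuator_id: int) -> tuple[int, int]:
    """Split a flat index into (chain, unit). Assumed convention: id = chain * 16 + unit."""
    if not 0 <= actuator_id < TOTAL_ACTUATORS:
        raise ValueError(f"actuator_id must be in 0..{TOTAL_ACTUATORS - 1}")
    return divmod(actuator_id, UNITS_PER_CHAIN)


def to_actuator_id(chain: int, unit: int) -> int:
    """Inverse of to_chain_unit under the same assumed convention."""
    if not 0 <= chain < CHAINS:
        raise ValueError(f"chain must be in 0..{CHAINS - 1}")
    if not 0 <= unit < UNITS_PER_CHAIN:
        raise ValueError(f"unit must be in 0..{UNITS_PER_CHAIN - 1}")
    return chain * UNITS_PER_CHAIN + unit
```

Under this convention, index 17 would be the second unit on the second chain, that is `(1, 1)`.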

Per-Unit Microcontrollers

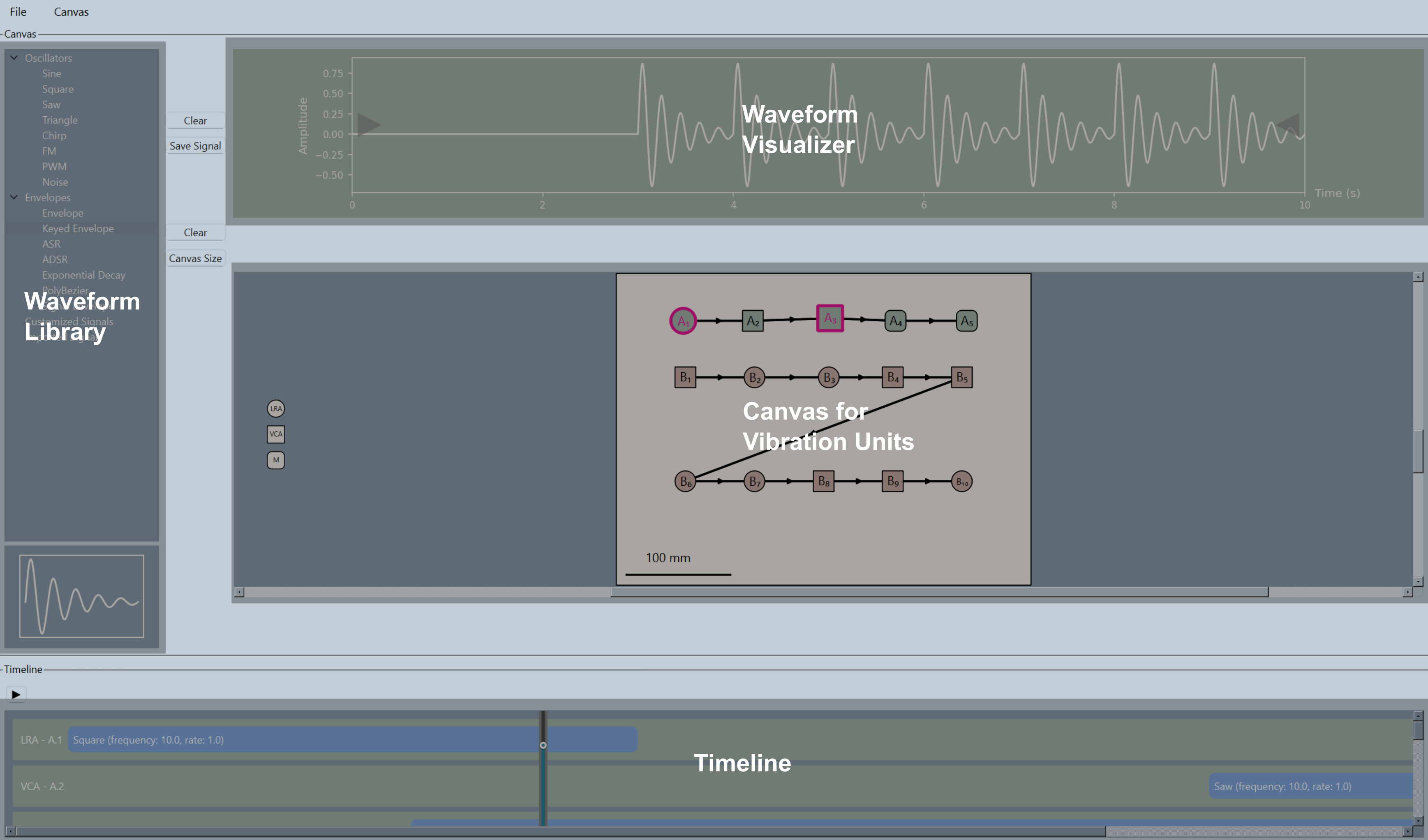

Local waveform synthesis for crisp timing + low latency.

Fine-Grained Frequency & Intensity

16 intensity levels + multi-band frequencies for expressive patterns.
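
Sixteen levels fit in 4 bits, so a normalized intensity can be quantized to an integer from 0 to 15. The sketch below shows one way this might be done. The function name `quantize_intensity` and the linear mapping are assumptions for illustration; the actual firmware encoding and the available frequency bands are not specified here.

```python
INTENSITY_LEVELS = 16  # from the feature description


def quantize_intensity(value: float) -> int:
    """Map a normalized intensity in [0.0, 1.0] to a level in 0..15.

    Assumes a linear scale with round-half-up; out-of-range inputs are clamped.
    """
    clamped = min(max(value, 0.0), 1.0)
    max_level = INTENSITY_LEVELS - 1
    return int(clamped * max_level + 0.5)
```

For example, `quantize_intensity(1.0)` gives the top level, 15, and anything at or below 0.0 gives level 0.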

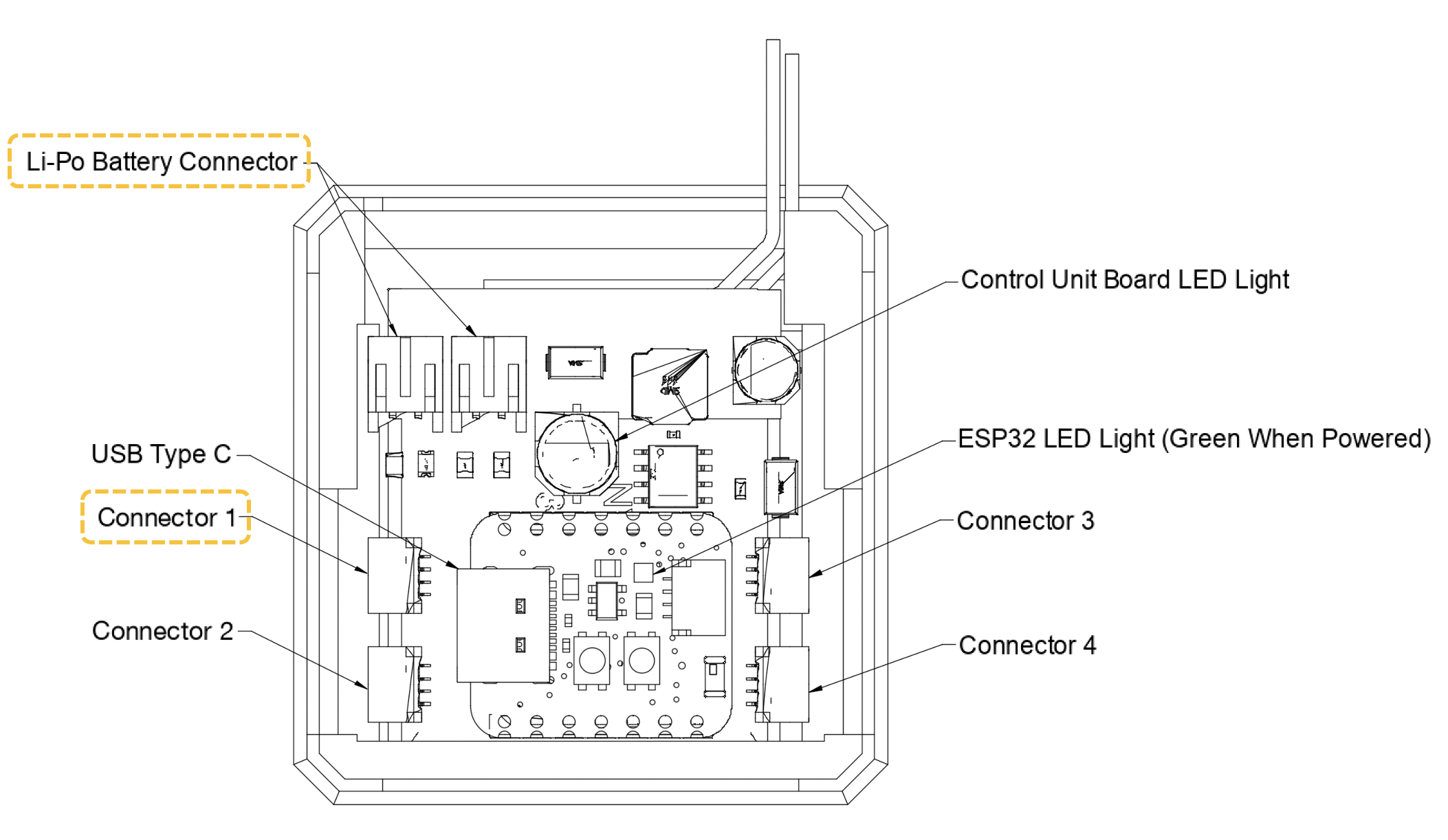

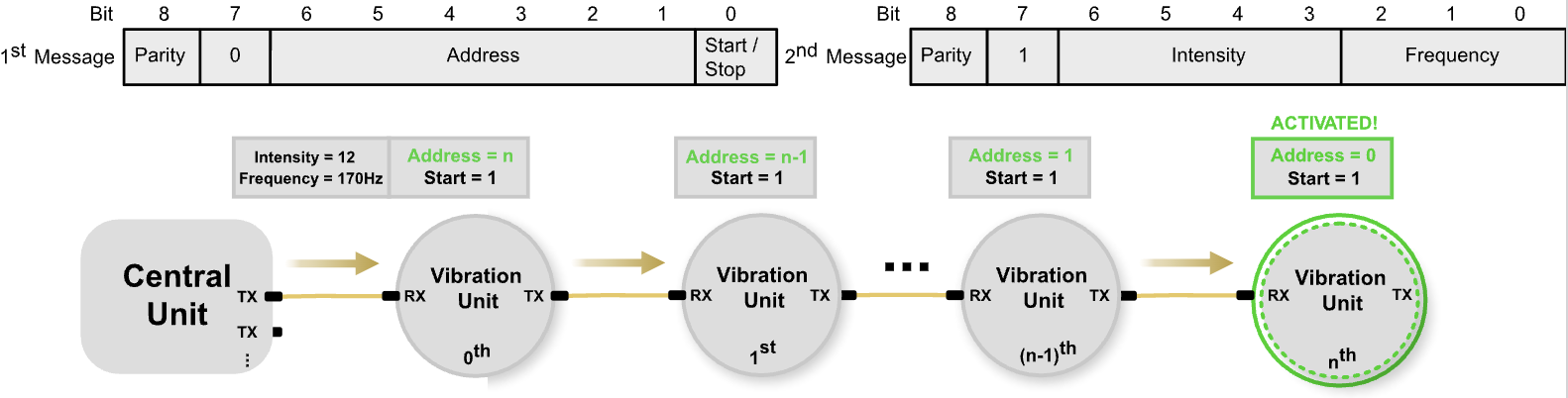

BLE + UART Hybrid Pipeline

BLE for high-level patterns; UART for chain-level distribution.
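
One way to picture the hand-off between the two links: a pattern frame arrives as a list of per-actuator commands and is then split into one batch per chain for distribution. The sketch below is a hypothetical host-side model of that split. The frame format, the flat-index convention (`id = chain * 16 + unit`), and the name `split_by_chain` are all assumptions, and no real BLE or UART calls are made.

```python
from collections import defaultdict

CHAINS = 8
UNITS_PER_CHAIN = 16
MAX_LEVEL = 15  # 16 intensity levels, 0..15


def split_by_chain(frame: list[tuple[int, int]]) -> dict[int, list[tuple[int, int]]]:
    """Group (actuator_id, level) commands into per-chain lists of (unit, level).

    Hypothetical frame format; the real wire protocol is not described here.
    """
    per_chain: dict[int, list[tuple[int, int]]] = defaultdict(list)
    for actuator_id, level in frame:
        if not 0 <= actuator_id < CHAINS * UNITS_PER_CHAIN:
            raise ValueError(f"invalid actuator_id: {actuator_id}")
        if not 0 <= level <= MAX_LEVEL:
            raise ValueError(f"invalid level: {level}")
        chain, unit = divmod(actuator_id, UNITS_PER_CHAIN)
        per_chain[chain].append((unit, level))
    return dict(per_chain)
```

Each resulting list could then be written to the corresponding chain's serial link, so that only chains with active units receive traffic.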

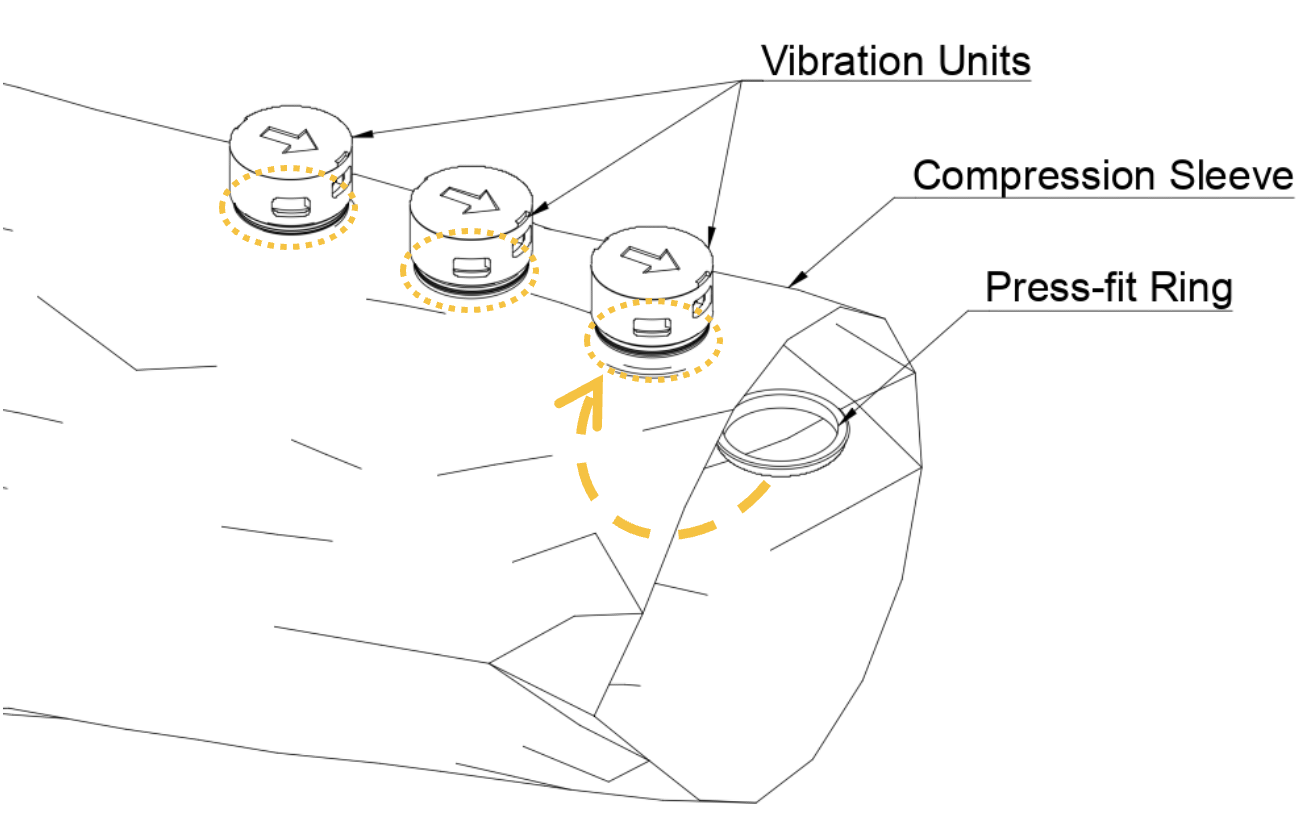

Modular Wearable Design

Snap-fit actuators — no sewing needed.

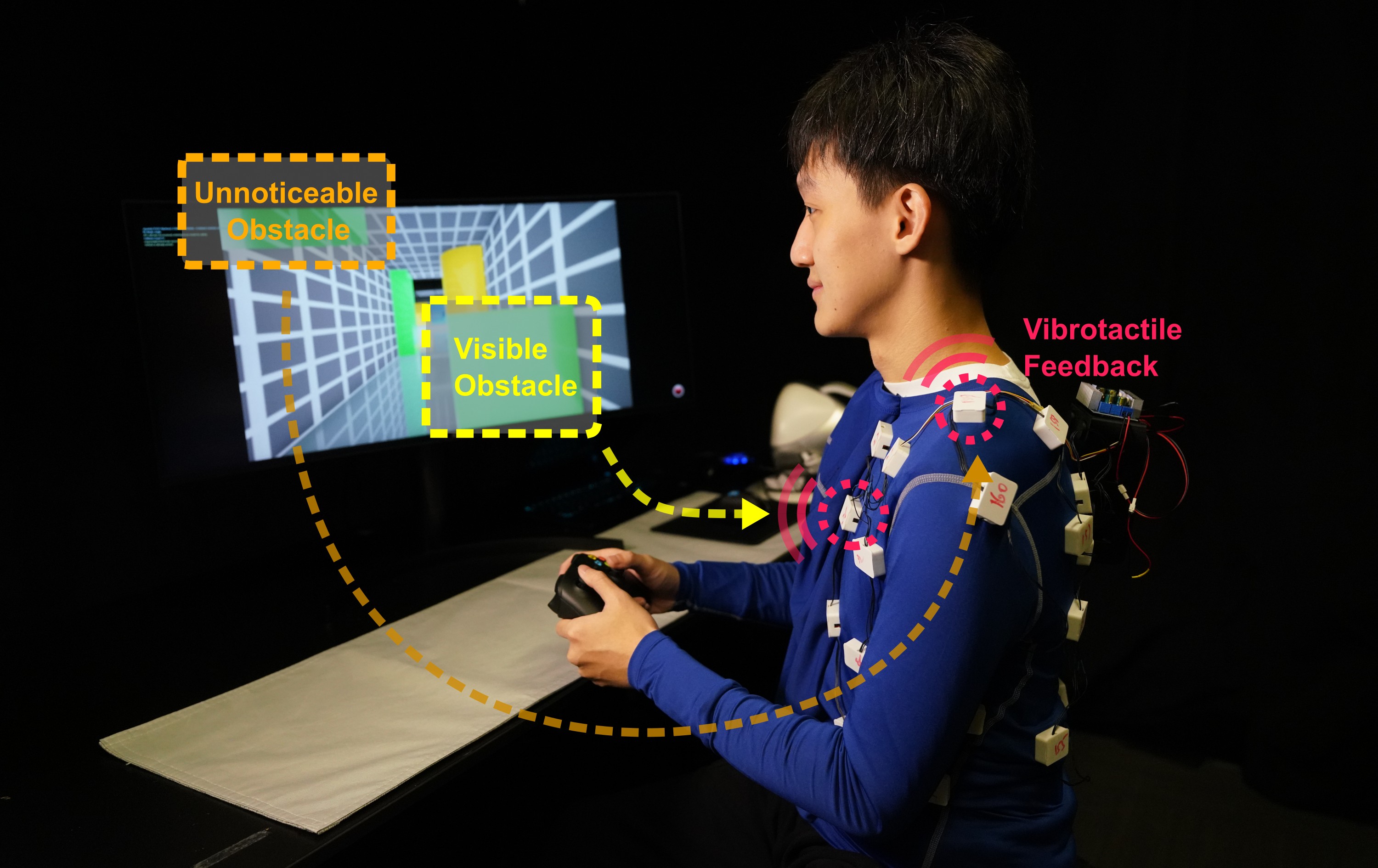

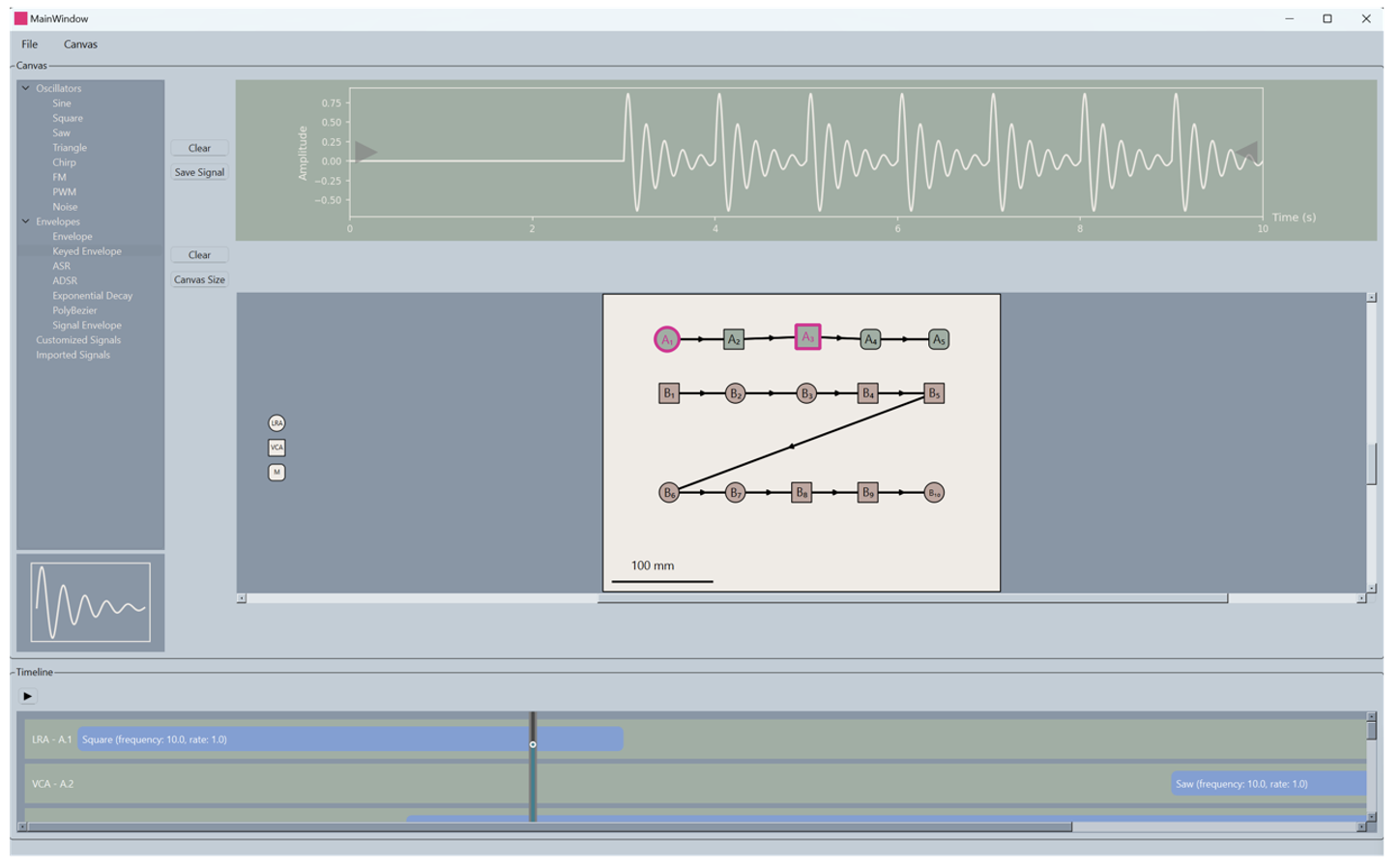

Toolkit for R&D

Ideal for VR, tactile illusions, phoneme mapping, and robotics feedback.